Table-valued parameters (TVPs) have important considerations that developers and DBAs need to be aware of. It is essential that application code specify the proper data type and length for TVP columns in order to achieve optimal performance and reduce unnecessary overhead. Additionally, a trace (Extended Events, server-side SQL Trace, Profiler) that captures RPC events of an inappropriately defined TVP can not only exacerbate performance issues but also affect the stability of the SQL Server instance in some cases.

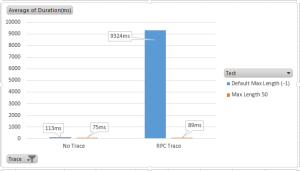

The graph below summarizes the impact the application code max column length specification can have on performance, without and with a trace running. All tests used the same table type of 10 varchar(50) columns and a 10,000-row TVP passed via a DataTable object of 10 string columns. The only variables were the max column length specified by the app code and whether a trace was running on the database server. The client application ran on a different machine than the database server, and elapsed time was measured by the application.

Figure 1: Impact of TVP maximum column length specification with and without tracing

The average duration was 113ms without a trace running when the app code used the default -1 max column length. However, when max column length 50 was specified (matching the varchar(50) column of the table type), the average duration dropped significantly to 75ms. The trivial code change of specifying the string column max length of 50 improved performance by 33%.

I then ran the same pair of tests while a trace captured the RPC completed events. The average duration of the default max length test increased from 113ms to 9,324ms with the trace running, an over 80x degradation in performance! The test with the explicit 50 character max length was not impacted nearly as much, increasing from 75ms to 89ms (which is tolerable, IMHO).
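The only client-side difference between the two test variants is the MaxLength of each DataTable column. The sketch below illustrates that difference; the BuildTestTable helper, the column names, and the row values are illustrative assumptions, not the original test harness.

```csharp
using System;
using System.Data;

// Hypothetical helper: builds a DataTable of 10 string columns and 10,000 rows,
// mirroring the shape of the test TVP. Pass -1 to keep the DataColumn default.
static DataTable BuildTestTable(int maxLength)
{
    var table = new DataTable();
    for (int c = 1; c <= 10; c++)
    {
        DataColumn column = table.Columns.Add($"Col{c}", typeof(string));
        if (maxLength > 0)
        {
            // Match the varchar(50) columns of the table type.
            column.MaxLength = maxLength;
        }
    }

    for (int r = 0; r < 10_000; r++)
    {
        DataRow row = table.NewRow();
        for (int c = 0; c < 10; c++)
        {
            row[c] = $"value {r}";
        }
        table.Rows.Add(row);
    }
    return table;
}

DataTable defaultLengths = BuildTestTable(-1);   // columns keep the default MaxLength
DataTable explicitLengths = BuildTestTable(50);  // columns declare MaxLength 50

Console.WriteLine(defaultLengths.Columns[0].MaxLength);   // -1
Console.WriteLine(explicitLengths.Columns[0].MaxLength);  // 50
```

Either table can be assigned as the value of the TVP parameter; only the column meta-data sent to the server differs.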

TVP Internals

Under the hood, TVP data are passed to SQL Server over the Tabular Data Stream (TDS) protocol. The client API sends TVP column meta-data to SQL Server describing the data type, length, and other meta-data for each TVP column followed by data rows with each column in native format matching the preceding data type specification. SQL Server uses the provided column meta-data to prepare and fill buffers for efficient processing on the server side, leveraging native types to eliminate parsing overhead similarly to other parameterized queries.

Before a query or stored proc with a TVP starts executing, SQL Server creates a table in tempdb with the same schema as the parameter table type and uses bulk insert internally to efficiently load the table with TVP rows streamed by the client application. The size of a TVP is constrained only by available tempdb storage. SQL Server executes the query/proc after the TVP temp table is loaded and the parameterized T-SQL query/proc can then use the TVP data.

The TVP columns provided by the client application do not have to match the schema of the target table type; SQL Server implicitly converts TVP values to match the target table type column when data types differ. Although not optimal, implicit conversion is generally not a major factor in overall TVP performance.

The application-specified TVP max column length can impact performance significantly, and in some cases dramatically, as illustrated by the performance tests shown earlier. SQL Server prepares to receive TVP data up to the max length specified by the client application rather than the defined size of the target table type column. When the specified max length of a variable-length column exceeds the 8000 byte tipping point, SQL Server uses a different code path to allow for large object (LOB) values up to 2GB. Unless the table type actually contains LOB columns (varchar(MAX), nvarchar(MAX)), database server resources are wasted unnecessarily when an inappropriate max column length is specified.

LOB values are especially problematic when a trace captures the RPC completed event of a TVP query. Tracing uses memory from the OBJECTSTORE_LBSS memory pool to build trace records that contain TVP LOB values. From my observations of the sys.dm_os_memory_clerks DMV, each LOB cell of a TVP requires about 8K during tracing regardless of the actual value length. This memory adds up very quickly when many rows and LOB columns are passed via a TVP with a trace running. For example, the 10,000 row TVP with 10 LOB columns used in the earlier test required over 800MB of memory for a single trace record. A large number of TVP LOB cells and/or concurrent TVP queries can cause queries to fail with insufficient memory errors. In extreme cases, the entire instance can become unstable and even crash due to tracing of TVP queries.
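The arithmetic behind that figure can be sketched as follows. This is a back-of-the-envelope estimate only: the 8 KB per cell value comes from the observation above, and the helper name and the concurrency multiplier are assumptions added for illustration.

```csharp
using System;

// Assumption from the observation above: about 8 KB of OBJECTSTORE_LBSS memory
// per traced LOB cell, regardless of the actual value length.
const long KilobytesPerTracedLobCell = 8;

// Hypothetical helper: rough memory needed for the trace records of
// concurrently traced TVP requests.
long EstimateTraceKilobytes(long rows, long lobColumns, long concurrentRequests = 1) =>
    rows * lobColumns * KilobytesPerTracedLobCell * concurrentRequests;

// The test TVP: 10,000 rows x 10 LOB columns = 100,000 LOB cells.
Console.WriteLine(EstimateTraceKilobytes(10_000, 10));      // 800000 (KB, roughly 800 MB)
Console.WriteLine(EstimateTraceKilobytes(10_000, 10, 20));  // 16000000 (KB, roughly 16 GB)
```

The second line shows how quickly the estimate grows if 20 such requests were traced at the same time.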

Specifying Proper TVP Parameter Column Meta-Data

A SQL Server development best practice has long been to use strongly-typed parameters with attention to detail regarding the parameter data type and length such that it is consistent with the types on the server. This practice improves performance by avoiding implicit data type conversions, promotes sargable expressions, avoids unnecessary procedure cache bloat, and inherently validates data for proper typing on the client before it is sent to the database server. With scalar parameters, one need only specify the correct SqlDbType along with the proper length, precision/scale (avoiding AddWithValue method to add parameters) and all is well in the world.
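For scalar parameters, that practice might look like the sketch below. The procedure name, parameter names, and values are hypothetical, and the example assumes the Microsoft.Data.SqlClient package (System.Data.SqlClient exposes the same types).

```csharp
using System;
using System.Data;
using Microsoft.Data.SqlClient; // assumption: NuGet package reference

// Hypothetical stored procedure and parameters, for illustration only.
using var command = new SqlCommand("dbo.usp_AddCustomer")
{
    CommandType = CommandType.StoredProcedure
};

// varchar(50) on the server: specify both the type and the declared length.
command.Parameters.Add("@LastName", SqlDbType.VarChar, 50).Value = "Smith";

// decimal(9, 2) on the server: specify precision and scale explicitly.
SqlParameter creditLimit = command.Parameters.Add("@CreditLimit", SqlDbType.Decimal);
creditLimit.Precision = 9;
creditLimit.Scale = 2;
creditLimit.Value = 1500.00m;

// By contrast, AddWithValue infers the type and length from the supplied value:
// command.Parameters.AddWithValue("@LastName", "Smith");

Console.WriteLine(command.Parameters["@LastName"].Size); // 50
```

No connection is needed to build the parameters; the command would still need an open SqlConnection before it could be executed.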

TVP parameters require additional column meta-data not applicable to scalar parameters. The parameter data type of a TVP in .NET is always SqlDbType.Structured. The additional TVP column meta-data is inferred from the supplied parameter value, which may be a DbDataReader, IEnumerable<SqlDataRecord>, or DataTable object. These objects inherently contain column meta-data and methods to enumerate rows, which the SqlClient API uses to send the TVP to SQL Server.
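As a rough illustration, the sketch below supplies a TVP value as a sequence of SqlDataRecord objects whose column meta-data is declared explicitly. The table type dbo.CustomerList, the procedure name, and the ToRecords helper are hypothetical; the example assumes the Microsoft.Data.SqlClient package, where SqlDataRecord and SqlMetaData live in Microsoft.Data.SqlClient.Server (Microsoft.SqlServer.Server when using System.Data.SqlClient).

```csharp
using System;
using System.Collections.Generic;
using System.Data;
using Microsoft.Data.SqlClient;        // assumption: NuGet package reference
using Microsoft.Data.SqlClient.Server;

// Hypothetical helper for a table type with a single varchar(50) column.
static IEnumerable<SqlDataRecord> ToRecords(IEnumerable<string> names)
{
    // Explicit column meta-data: data type and max length match the table type.
    var metaData = new[] { new SqlMetaData("CustomerName", SqlDbType.VarChar, 50) };
    foreach (string name in names)
    {
        var record = new SqlDataRecord(metaData);
        record.SetString(0, name);
        yield return record;
    }
}

using var command = new SqlCommand("dbo.usp_ImportCustomers")
{
    CommandType = CommandType.StoredProcedure
};

// A TVP is always SqlDbType.Structured; TypeName names the table type on the server.
SqlParameter tvp = command.Parameters.Add("@Customers", SqlDbType.Structured);
tvp.TypeName = "dbo.CustomerList";
tvp.Value = ToRecords(new[] { "Contoso", "Fabrikam" });
```

A DataTable or DbDataReader can be assigned to Value in the same way; in that case the column meta-data comes from the object's own schema instead of SqlMetaData.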

DataTable objects are most commonly used as TVP values. DataTables are easy to use and can serve as containers for data beyond just TVP usage. But unlike DbDataReader and IEnumerable objects, a big gotcha with a DataTable is that the default column data type is String with a maximum length of -1 (2GB LOB). This is the .NET equivalent of the SQL Server nvarchar(MAX) data type and has many insidious and negative implications with a TVP. First, values of types other than string (DateTime, Integer, GUID, etc.) that are added to a DataTable string column will be converted to string. Consequently, using the default DataTable column string data type for non-string types will:

• increase client memory requirements compared to more compact native types

• incur conversion overhead

• prevent strong-typed data validation on client side

• require date format aware formatting of date and datetime values

• require using a period as the decimal separator

• increase network usage compared with smaller native types
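A minimal sketch of the alternative, declaring each DataTable column with its native .NET type, is shown below. The column names and values are hypothetical.

```csharp
using System;
using System.Data;

// Hypothetical columns; each uses a native .NET type instead of the default String.
var table = new DataTable();
table.Columns.Add("OrderId", typeof(int));
table.Columns.Add("OrderDate", typeof(DateTime));
table.Columns.Add("Amount", typeof(decimal));
table.Columns.Add("RowGuid", typeof(Guid));

// Values are stored in native form, so no string formatting and no
// culture-specific date or decimal separators are involved.
table.Rows.Add(1, new DateTime(2015, 1, 31), 19.99m, Guid.NewGuid());

Console.WriteLine(table.Rows[0]["OrderDate"] is DateTime); // True
Console.WriteLine(table.Rows[0]["Amount"] is decimal);     // True
```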

When String is the proper column data type, developers must be especially mindful of the max length specification when the DataTable is used as the TVP value. Strings in Windows and .NET are Unicode, requiring 2 bytes per character. This means a max length of over 4000 characters will cross the 8000 byte threshold for LOB data on the server side regardless of the table type on the server. Avoid using long string columns in TVPs when many rows are passed and never use the default -1 length unless a MAX type is actually intended.
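One way to catch such columns before a DataTable is sent as a TVP is a small pre-flight check like the sketch below. The FindLobStringColumns helper and the column names are hypothetical; the 4000 character limit follows from the 2 bytes per character and 8000 byte threshold described above.

```csharp
using System;
using System.Collections.Generic;
using System.Data;

// Hypothetical pre-flight check: lists string columns whose MaxLength would be
// treated as a LOB on the server (the default -1, or more than 4000 characters,
// which is more than 8000 bytes at 2 bytes per character).
static List<string> FindLobStringColumns(DataTable table)
{
    const int MaxNonLobChars = 4000;
    var offenders = new List<string>();
    foreach (DataColumn column in table.Columns)
    {
        if (column.DataType == typeof(string) &&
            (column.MaxLength < 0 || column.MaxLength > MaxNonLobChars))
        {
            offenders.Add(column.ColumnName);
        }
    }
    return offenders;
}

var table = new DataTable();
table.Columns.Add("Code", typeof(string)).MaxLength = 50;
table.Columns.Add("Notes", typeof(string));                // default MaxLength of -1
table.Columns.Add("Body", typeof(string)).MaxLength = 5000;

Console.WriteLine(string.Join(", ", FindLobStringColumns(table))); // Notes, Body
```

Columns that are intentionally mapped to a MAX type would be reported too, so a check like this is a prompt for review rather than a hard rule.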

DBAs who support applications that use TVPs should be aware of the repercussions tracing can have on performance and SQL Server memory. Avoid capturing TVP RPC completed events of large TVP requests, if possible. When tracing TVP RPC completed events, monitor the OBJECTSTORE_LBSS memory pool for excessive memory usage.
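A monitoring check along those lines might look like the sketch below. It assumes SQL Server 2012 or later (where sys.dm_os_memory_clerks exposes pages_kb), a login with permission to view server state, and the Microsoft.Data.SqlClient package; the helper name and the connection string passed on the command line are hypothetical.

```csharp
using System;
using Microsoft.Data.SqlClient; // assumption: NuGet package reference

// Total memory currently held by the OBJECTSTORE_LBSS memory clerk, in KB.
const string LbssQuery = @"
SELECT SUM(pages_kb)
FROM sys.dm_os_memory_clerks
WHERE type = N'OBJECTSTORE_LBSS';";

// Hypothetical helper: returns 0 when the DMV reports no rows for the clerk.
long GetLbssKilobytes(string connectionString)
{
    using var connection = new SqlConnection(connectionString);
    using var command = new SqlCommand(LbssQuery, connection);
    connection.Open();
    var result = command.ExecuteScalar();
    return result is null or DBNull ? 0 : Convert.ToInt64(result);
}

// Usage: pass a connection string as the first command-line argument.
if (args.Length > 0)
{
    Console.WriteLine($"OBJECTSTORE_LBSS: {GetLbssKilobytes(args[0])} KB");
}
```

Sampling this value while a trace is capturing TVP RPC completed events gives a rough view of how much memory the trace records are consuming.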

Acknowledgements

I’d like to thank SQL Server MVP Ola Hallengren for suggesting that I write this article.